Here in Canada, the last day of September marked the first National Day of Truth and Reconciliation, to commemorate the “tragic and painful history and ongoing impacts of ‘residential schools.’” My kids wore orange to school, and we read a lot of books and had a lot of discussions about what these institutions were and why they were wrong.

It’s remarkable how, in explaining these hard concepts to 3- and 5-year-olds, it becomes immediately clear that the label “schools” is just the wrong thing to focus on. Sure, my children are discomfited by the haircutting, the physical abuse, and the insufficient food. But all of their questions, concern, and indignation are rooted in the most horrific aspect: children as young as four, taken away by force from their mothers and fathers. They immediately see that the “school” part is almost beside the point. The forced separation of parents and children was cruel and inhumane, and would have been wrong even if the children at these institutions hadn’t died at horrifying rates.[1]

Labels matter, and the word “school” has powerful framing effects. In calling them “residential schools,” we endow a profound moral wrong with undeserved positive connotations such as growth, learning, and expanding horizons. Using the word “school” allows us to sugarcoat these institutions as places of good intention where bad things happened. It allows us to ignore the true extent of the atrocity and avoid accountability.

What we call “residential schools” were nothing less than state-sponsored mass abductions. None of this is to minimize the appalling treatment the children suffered while at these institutions, or their explicit objective to eradicate Indigenous language and culture. But persisting in the “school” label occludes both the historic wrong and the ways we continue to inflict the same injustice on Indigenous parents and children. By adopting language that centres the forced separation of children and parents, we make it harder to persist in comfortable ignorance, and lay bare a systemic human rights violation that was perpetrated on Indigenous people for more than a century.

How does machine learning shape motivation at work?

Over the past month, I’ve been thinking a lot about the interplay of machine learning[2] and organizational behaviour. So far, most workplace machine learning applications make what I think of as passive predictions. These are use cases like automatic transcription, where the machine learning algorithm is helping you out but you, the human, are the arbiter of right and wrong. The machine learning prediction isn’t prompting you to any particular action, aside from perhaps correcting mistakes. These passive prediction systems are basically benign and shouldn’t behave very differently from non-AI software.

On the other hand, we have what I think of as prompting predictions. We see these a lot in consumer applications: your streaming service recommends a show you might like, your maps app suggests a faster route given current traffic, your social media feed surfaces posts it thinks you will engage with. These kinds of predictions are meant to prompt you to action; the actions that you take (or don’t) are fed back into the algorithm, and you usually don’t know whether the machine learning prediction was “right.” As these kinds of prompting predictions become increasingly widespread in enterprise applications, I think we’ll see some interesting (pernicious?) effects on organizational behaviour.

To implement machine learning, organizations will need to quantify a lot of things that previously were only defined qualitatively. My hypothesis is that quantification will have some unhelpful knock-on effects for employee motivation, and we should anticipate and design for those when we implement machine learning.

Quantification and employee motivation

In addition to whatever extrinsic rewards they provide, organizations are very reliant on employees’ intrinsic motivation to do great work. Edward Deci and Richard Ryan’s self-determination theory (SDT) says that intrinsic motivation is driven by our desire to satisfy three needs:

competence — controlling outcomes and experiencing mastery

autonomy — choosing actions that accord with your personal values and sense of self

relatedness — feeling connected to others and supported in pursuits

As best we can tell, quantification is helpful for motivation. Most attempts at gamification quantify some kind of score, and we use step trackers to quantify physical activity as steps because doing so motivates us to be more physically active. Per SDT, this works because the step count is a proxy for competence: I can see my progress more easily, which reinforces my sense that I am doing well at the task or goal. The interaction with autonomy and relatedness, however, is less clear. Quantifying steps can make it easier for me to control my own path towards a goal (autonomy) and to meaningfully convey my progress to others (relatedness). But it might also make me feel like I am ceding autonomy to the step tracker, or engender feelings of competition rather than connection and support.

Quantification, in other words, tends to create a very specific kind of motivation: one that over-indexes on competence, as measured by progress on a narrow set of metrics. That’s problematic for organizations, as employees tend to develop tunnel vision for the numbers and lose sight of bigger-picture goals.[3] For exactly that reason, organizations put a lot of thought and care into choosing the right things to measure for traditional metrics and KPIs. By carefully selecting metrics with a view to which behaviours they incentivize, organizations can mitigate the risk of behaviours that make sense for an individual pursuing a goal but are counterproductive for the organization as a whole. Unfortunately, that paradigm breaks down when it comes to machine learning.

Machine learning is quantification on steroids

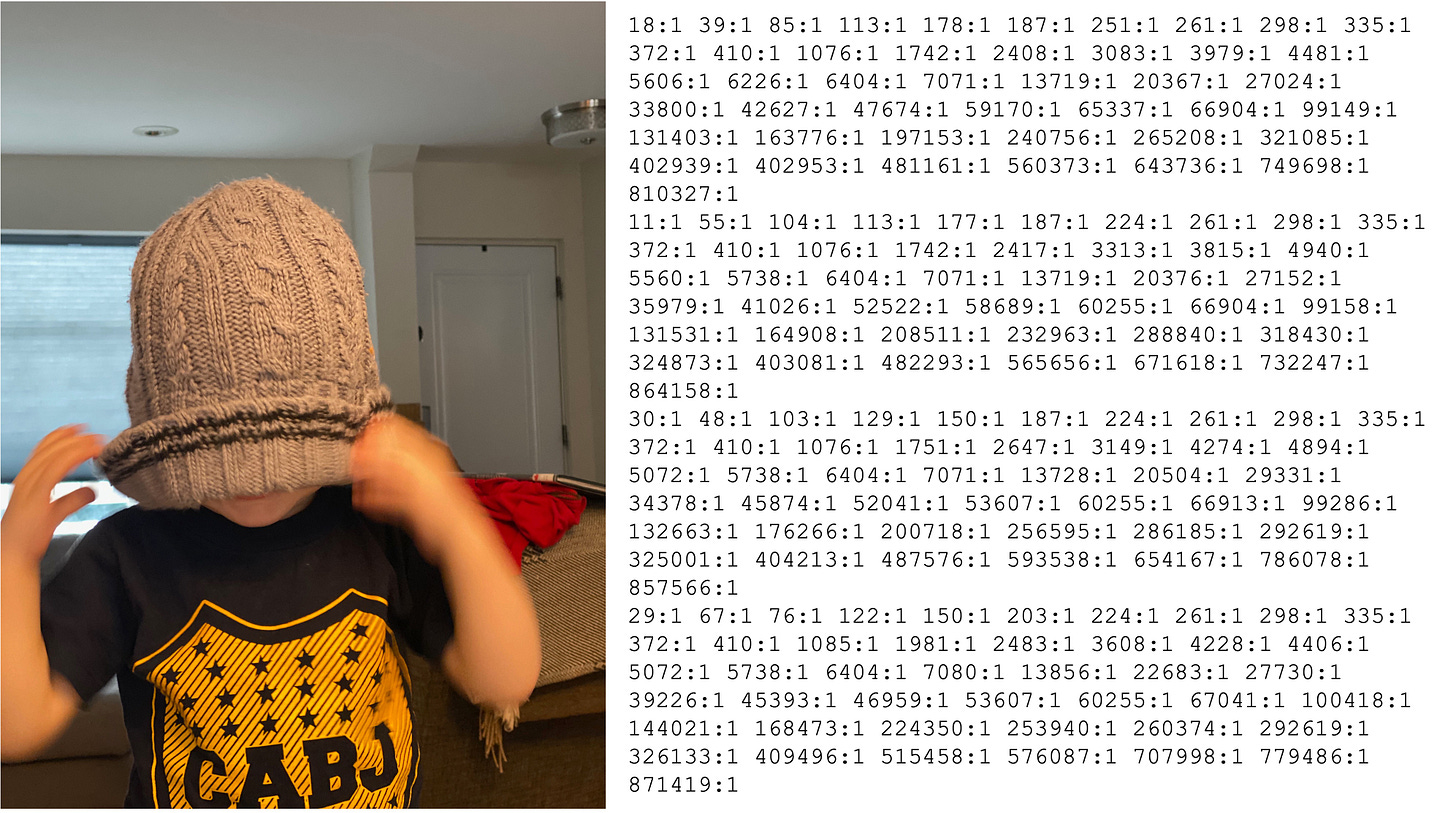

When an organization implements machine learning, the first step is choosing which features of the world to use and figuring out a way to represent them as numbers. This is not straightforward! Consider something as simple as the concept of “hats.” It actually encodes a lot of rich, qualitative aspects of the world that are very intuitive at a human level. Even a toddler knows that some hats keep you warm in winter and others keep you cool in summer, or that if you put your underwear on your head, it’s now a hat.

But when we capture “hats” for a machine learning model, we need to get very explicit and make choices about which features of “hatness” to represent and exactly how to quantify them as numbers. We generally try to include as many features as possible; the idea is that the machine learning algorithm is better than people at figuring out what’s important, so we should give it everything and let it decide. Our choices of numerical representation end up being driven by whatever produces the best prediction accuracy on an existing data set.
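To make that explicitness a little more concrete, here is a toy Python sketch. The feature names (`worn_on_head`, `keeps_warm`, and so on) are hypothetical and hand-picked for illustration; a real pipeline would typically engineer or learn far more of them. The point is only that anything left off the list, like the fact that underwear on your head counts as a hat, simply doesn’t exist as far as the model is concerned.

```python
# Hypothetical, hand-picked features of "hatness". Anything not listed here
# is invisible to any model trained on these vectors.
FEATURES = ["worn_on_head", "keeps_warm", "keeps_cool", "has_brim"]


def encode(item: dict) -> list[float]:
    """Turn a qualitative description into a fixed-length numeric vector.

    Missing attributes default to 0.0, which quietly conflates
    "not true" with "never recorded".
    """
    return [float(item.get(name, 0)) for name in FEATURES]


toque = {"worn_on_head": True, "keeps_warm": True}
sun_hat = {"worn_on_head": True, "keeps_cool": True, "has_brim": True}
underwear_on_head = {"worn_on_head": True, "is_underwear": True}

print(encode(toque))              # [1.0, 1.0, 0.0, 0.0]
print(encode(sun_hat))            # [1.0, 0.0, 1.0, 1.0]
print(encode(underwear_on_head))  # [1.0, 0.0, 0.0, 0.0]  ("is_underwear" is dropped)
```

Notice that the underwear ends up looking like a featureless generic hat: the one attribute that made it interesting never made it into the numbers.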

The resulting numerical representation will contain a lot of numbers, but it’s almost a given that we won’t have thought of everything, and some feature of hatness will have been lost. Moreover, spurious features unrelated to hatness might get added in.[4] Because the numerical representation is basically impenetrable to humans, we don’t really know which features are captured or how much they affect the machine learning prediction. What we end up with is a black box, and not even the person who built it really understands how the inputs relate to the predictions.

With that black box, we’ve effectively created a game for employees to play. Through the lens of SDT, the implications are clear. Employees will expend a lot of energy trying to figure out how their actions affect the algorithm, and even more energy trying to maximize their own outcomes[5] against whatever features the machine learning algorithm thinks are important. Employees will be driven by their need for competence (I want to score as well as possible) and their need for autonomy (I don’t want the algorithm to control me). And they will likely engage in all kinds of spurious behaviour that is counterproductive for the organization.

The normal way we solve for misaligned incentives when we’re creating metrics is to refine the data we measure. But if humans could figure out how to refine the data, then the model and problem space probably aren’t complex enough to require machine learning in the first place. To address these challenges in a machine learning context, we can’t rely on just swapping one self-interested goal for another. Instead, we need to shift employees’ motivations away from narrow self-interest and cultivate their motivation to serve group interests. The psychological literature on prosocial motivation gives us a good starting point for how we might do that.

Integrating prosocial motivation with machine learning

Prosocial motivation is when people deliberately engage in behaviours that benefit others. There are a number of intriguing possibilities[6] in this space, but I’d like to focus on an idea called autonomy support, because it ties together autonomy and relatedness, the two needs that quantification serves less well.

Autonomy support[7] is a concept that is completely independent of technology and machine learning. The theory comes from studying interpersonal relationships between employees and their supervisors, parents and children, and teachers and students. A relationship is autonomy supportive if the person in authority does the following:

Provides a good rationale for a request (“You’ll be late for school if you don’t get ready now.”)

Allows the individual some choice in completing the request (“What do you want to do first? Brush your teeth or get dressed?”)

Conveys confidence in the individual’s abilities (“You do such a great job getting dressed all by yourself.”)

Acknowledges the individual’s feelings towards the request (“You’re really having fun with your trucks and you don’t want to stop playing.”)

In empirical studies, psychologists train supervisors/parents/teachers to be autonomy supportive and then measure outcomes. When you create an autonomy-supportive environment, prosocial motivation increases. Specifically in the workplace, we also see that autonomy support increases trust in organizations, acceptance of organizational change, and overall work satisfaction.[8] So how might we implement some of those principles in a machine learning context?

Autonomy-supportive machine learning

The need to provide a rationale speaks to the importance of interpretability in machine learning models. There is ongoing work on visualizing machine learning models so that we can more clearly see why a model is making a certain prediction.[9] To date, a lot of this work has been driven by accuracy concerns: the visualizations help us identify instances where the model has learned the wrong things and might make harmful predictions. But autonomy support suggests that interpretability also helps employees apply machine learning outputs in the context of the organization’s holistic goals.
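What might a machine-provided rationale look like? Here is a minimal Python sketch of an additive model in the spirit of generalized additive models (GAMs): each feature contributes its own term to the prediction, so the output can be broken down feature by feature. Everything here is hypothetical, including the feature names, the intercept, and the hand-written “shape functions” (a real GAM would learn those curves from data).

```python
import math

# Hypothetical per-feature "shape functions" for a lead-scoring model.
# In a GAM each feature gets its own learned curve; these are hand-written
# stand-ins so the example is self-contained.
SHAPE_FUNCTIONS = {
    "site_visits_last_month": lambda v: 0.4 * math.log1p(v),
    "demo_requested": lambda v: 1.2 if v else 0.0,
    "days_since_last_contact": lambda v: -0.02 * v,
}
INTERCEPT = -1.0


def predict_with_rationale(prospect: dict):
    """Return a probability plus each feature's contribution to the log-odds."""
    contributions = {
        name: shape(prospect[name]) for name, shape in SHAPE_FUNCTIONS.items()
    }
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1 / (1 + math.exp(-log_odds))  # logistic link
    # Largest effects first, so the "why" is easy to scan.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


prob, why = predict_with_rationale(
    {"site_visits_last_month": 6, "demo_requested": True, "days_since_last_contact": 30}
)
print(f"probability: {prob:.2f}")
for name, effect in why:
    print(f"  {name}: {effect:+.2f}")
```

The ranked contributions are the rationale: in this made-up case, the requested demo is the biggest factor, and a month without contact pulls the score down.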

Allowing individuals some choice implies that we should avoid algorithms that return a single prediction. Rather, we should prefer to give users a list of likely possibilities and let them use their judgement. Suppose I am using machine learning to predict likely buyers for my sales team. I shouldn’t give my sales team output that says, “here is your most likely prospect.” Rather, I should output a list of high-potential prospects and let the salesperson choose which ones to pursue. Now, my employee retains some choice (and we’ve also built in a sanity check on the model’s accuracy).
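Here is a minimal Python sketch of that difference, assuming a model has already produced a score for each prospect. The company names and scores are made up, and `top_prospect` and `shortlist` are hypothetical helpers; the only library call is `heapq.nlargest` from Python’s standard library.

```python
import heapq


def top_prospect(scores: dict[str, float]) -> str:
    """Single-answer output: the one prospect with the highest model score."""
    return max(scores, key=scores.get)


def shortlist(scores: dict[str, float], k: int = 3) -> list[tuple[str, float]]:
    """Choice-preserving output: the k highest-scoring prospects, with scores,
    so the salesperson can apply their own judgement about whom to pursue."""
    return heapq.nlargest(k, scores.items(), key=lambda item: item[1])


# Hypothetical model scores (e.g. a predicted likelihood of buying).
scores = {"Acme Ltd": 0.81, "Birch & Co": 0.78, "Cedar Inc": 0.74, "Dunn LLC": 0.32}

print(top_prospect(scores))  # Acme Ltd
for name, score in shortlist(scores):
    print(f"{name}: {score:.2f}")
```

Returning the scores alongside the names conveys how close the top candidates are without dictating the decision. A score cutoff instead of a fixed `k` would be an equally reasonable design.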

This notion of giving people a list of choices implies tacit confidence in human abilities: not only am I giving you choices, but I think there is value in your discernment, over and above what the statistical models can tell us. I’ve really been influenced by Josh Lovejoy’s writing on human:AI collaboration. He argues that there are capabilities that are uniquely human, and capabilities that are uniquely AI, and we need to design products that make deliberate choices about how humans and machines will collaborate for a given problem space. If we can do that, then confidence in uniquely human abilities should be baked into the cake.

As for the last principle, acknowledging feelings, I’m skeptical about embedding empathy directly into machine learning. I suspect there is something about a computerized acknowledgement of feelings that will fall short of the mark. But I also think it would be a mistake to focus on embedding autonomy support solely into the machine learning applications. If the broader human environment is autonomy supportive, that will further bolster prosocial motivation and help keep people focused on the big picture of the group’s goals.

What I’m Working On

I was delighted to give a guest lecture to a Digital Nova Scotia program last month, where I introduced some of my ideas on machine learning and prosocial innovation. I’m really grateful to all the participants for their questions and comments, which were so helpful to me as I refined my thinking on this topic.

I’ve also been collaborating with Rosie Yeung at Changing Lenses on a workshop we call “Radically Reimagined Recruiting.” In the workshop, we explore the meritocracy myth and the limitations of current recruiting practices, and then use human-centred design to prototype more inclusive approaches to screening job applicants. The goal is to reframe the task in terms of applicants’ present and future capabilities, rather than past education and experience. We run a version with a generic job description, which has received a really positive reception from conferences and communities of practice. Next up, we’re hoping to run it with organizations that want to use the workshop to redesign their approach to screening, using a live job posting. Like so many HR processes, recruiting is very amenable to small-scale experiments that let organizations test out new approaches in low-risk ways. The workshop provides helpful scaffolding for making practical change.

Putting residential schools and machine learning into the same newsletter was a lot! Thanks for sticking with it… maybe next month I’ll just curate pictures of kittens. As always, I appreciate your time, and hope you found something interesting and useful to take away to your own world.

In comradeship,

S.

[1] Mortality rates at “residential schools” were unspeakably high. In 1907, the Chief Medical Officer found that roughly 25% of all children attending “residential schools” died of TB, and that these deaths were caused by bad ventilation and poor standards of care. This was widely known at the time: a 1907 headline from an Ottawa newspaper read “Schools Aid White Plague — Startling Death Rolls Revealed Among Indians — Absolute Inattention to the Bare Necessities of Health.”

[2] People can get pretty tied up in the semantics of what it means for an algorithm to be intelligent, whether something is machine learning or predictive analytics or whatever. I’m using the term machine learning quite broadly here, to denote any algorithm that uses large data sets to learn and then make new predictions about cases it hasn’t seen before. It shouldn’t matter what kind of machine learning approach you’re using, although if we’re talking about simple linear regressions, then I think most of the concepts I’m talking about here won’t apply.

[3] It can be problematic for individuals too. My activity tracker seems to misinterpret laundry folding as pretty vigorous walking, which inevitably leads to less time walking and more time sitting on my couch, watching Netflix and folding clothes.

[4] For instance, if I take lots of pictures of my children in hats because I think it’s adorable, a machine learning algorithm trained on my photos might come to think that the concept of hatness is related to the concept of children.

[5] Often, employees can affect input data in meaningful ways. If you’ve ever had a customer service rep impress upon you the importance of giving them a 10/10 on the satisfaction survey, you’ve experienced an example of this.

[6] Adam Grant (yes, that one) writes a lot about relational job design and how fostering connections between workers and beneficiaries of their work has positive effects on prosocial motivation. There are also some interesting theories on collectivist norms that seem applicable. But, well, this newsletter is already more than long enough.

[7] I took a lot away from Marylène Gagné’s oft-cited 2003 article.

[8] Basically, creating an autonomy-supportive environment is a good idea irrespective of machine learning.

[9] If you’re interested in the ML nitty-gritty, this 12-minute video has some neat visualizations of generalized additive models for pneumonia treatment. I also appreciated the “Transparency” chapter in Brian Christian’s book, The Alignment Problem.